Explainable Monitoring

The digital revolution is here, but we are not ready for it. Artificial intelligence already automates many processes in real-world applications, such as train operation or financial fraud detection. It is, however, brittle in novel scenarios and does not allow humans to validate its reasoning. This research will marry formal mathematical reasoning with machine learning and visualization to ensure, in real time, that deployed algorithms behave the way human experts expect. Explainable Monitoring will make interpretability an intrinsic feature of formal real-time monitoring. (NWO Veni 2024–2027)

RAIL

Combining formal methods and machine learning to react quickly to changes and disruptions in the rail network, in collaboration with Dutch Railways (NS), ProRail, and Utrecht University. (NWO 2024–2028)

VeriXAI (2020–ongoing)

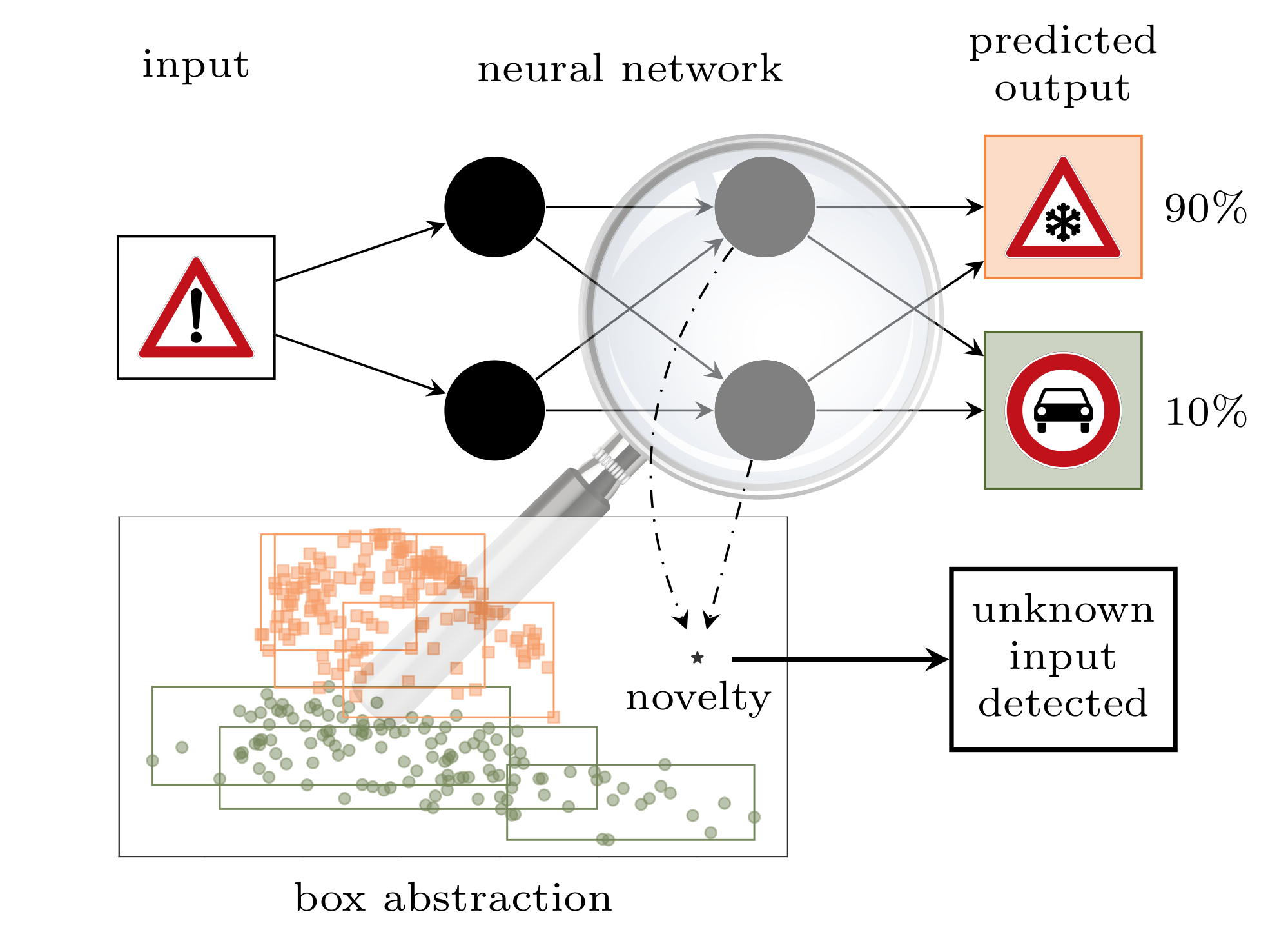

Outside-the-Box

A framework to monitor a neural network by observing the hidden layers.

Verification of Decision Trees

An approach to continuous-time safety verification of decision-tree control policies.

Into the Unknown

A framework to actively monitor a neural-network classifier and automatically adapt to unknown classes of inputs.

Decision trees (2020–ongoing)

Magic Books

An approach to combining reinforcement learning and decision trees for reliable controller design.

Past Projects

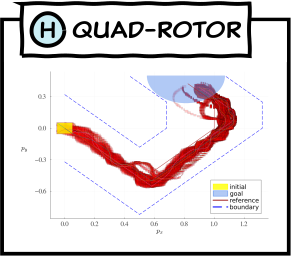

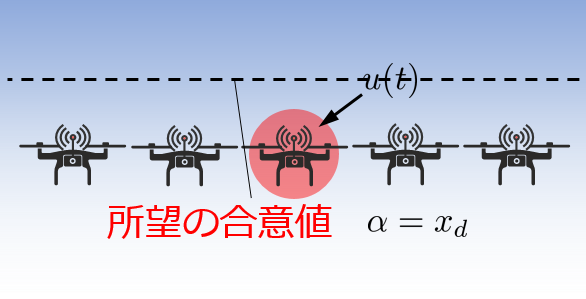

ERATO MMSD (Tokyo, 2018)

Meta-heuristic optimization for drone teams.

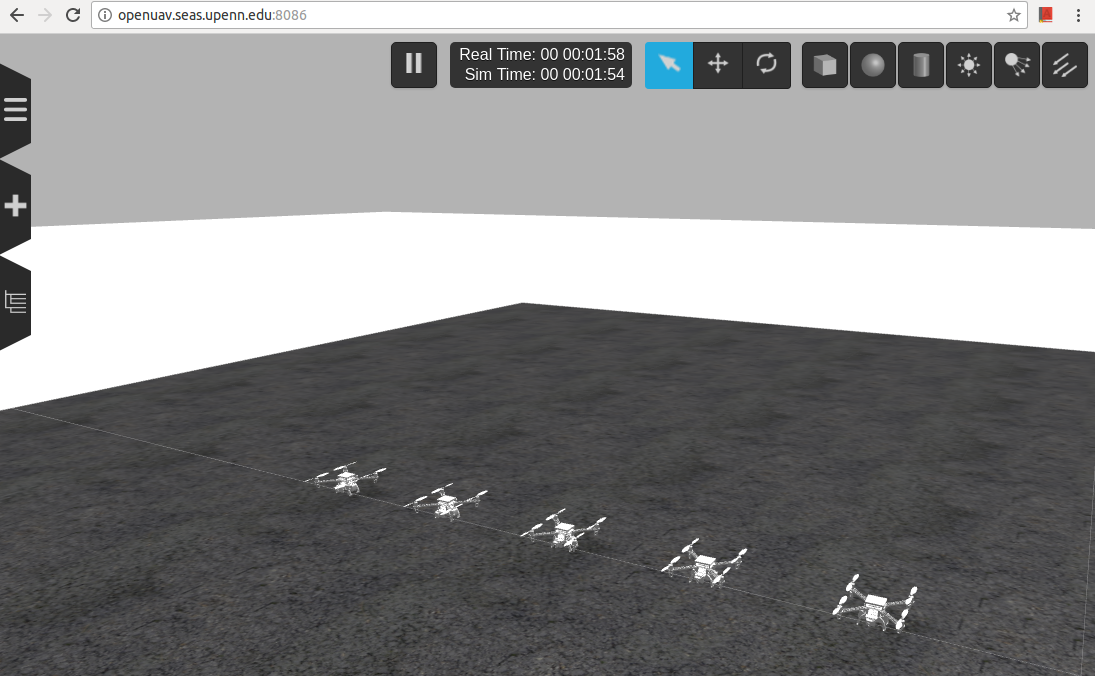

GRASP Lab UPenn (Philadelphia, 2018)

Meta-heuristic optimization for drone teams.

Stony Brook University (2016–2019)

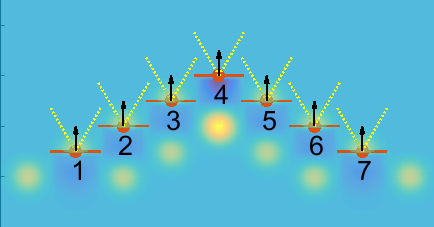

V-formation control

Vienna Ball of Sciences 2019

A 3D projection mapping installation.